Today, after months of criticism from users, activist groups, and former employees, Twitter is rolling out new product and policy updates in an attempt to combat the harassment, hate speech, and trolling that have plagued the platform for a decade.

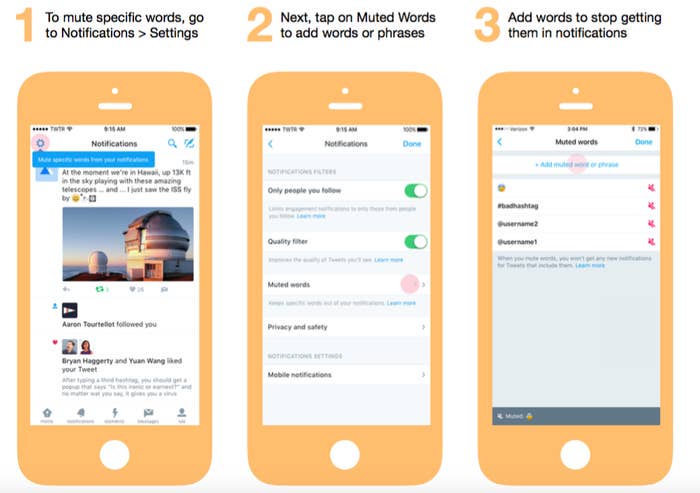

On the product end, Twitter has augmented its mute feature to allow users to filter specific phrases, keywords, and hashtags, similar to what's found on Instagram, which added a keyword filter this September. The feature was widely believed to be close to completion late last month after Twitter temporarily rolled out a test of the mute filter to select users.

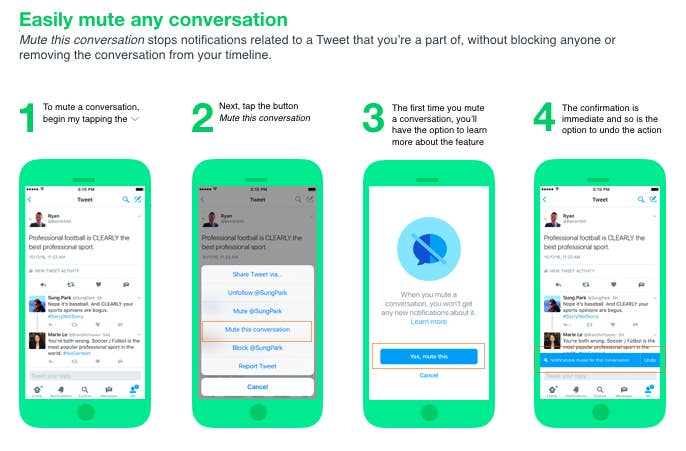

But while the test resembled a standard keyword filter, Twitter’s new mute tool will go a step further, letting users mute entire conversation threads. Muting a thread stops notifications from it without removing the thread from the user's timeline or blocking anyone involved. According to Twitter, you’ll only be able to mute conversations that relate to a tweet you’re included in (that is, one where your handle is mentioned).

For now, the product update appears to be centered on the notification experience, which has been a minefield for victims of serial harassment on the platform. Those targeted by Twitter’s brutish underbelly have long called for a mute feature, but muting is largely cosmetic: it hides abuse instead of fixing it. Expanded mute tools may shield users from a deluge of unwanted interactions, but they will do little to stop the underlying harassment.

To that end, Twitter also announced it will add a new “hateful conduct” reporting option (when users report an “abusive or harmful” tweet, they’ll now see an option for “directing hate against a race, religion, gender, or orientation”). The company is also adding new “extensive” internal training for its support teams that deal with hateful harassment. According to the company, its Safety team support staff will undergo “special sessions on cultural and historical contextualization of hateful conduct” as well as refresher programs that will track how hate speech and abuse evolve on the platform (a necessary step, as many trolls have begun to create their own hateful code language with which to bypass traditional censors and filters).

For victims of abuse, the new reporting flow will allow bystanders to report abuse on behalf of other users. More importantly, it will provide context — and perhaps urgency — to the safety personnel reviewing the abuse reports.

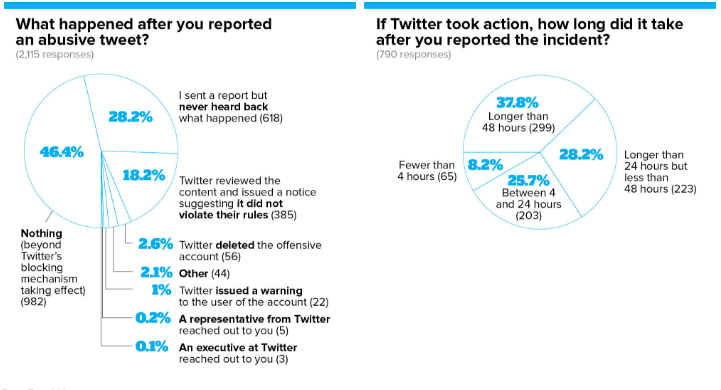

For Twitter, these reporting changes come after a rash of criticism from many of its most devoted users who feel marginalized by Twitter’s failure to respond to reports. Throughout its first decade, Twitter’s protocols for addressing abuse have been largely opaque, and targets of harassment are frequently in the dark about whether their appeals for help were heard. In September, a BuzzFeed News survey of over 2,700 users found that 90% of respondents said that Twitter didn’t do anything when they reported abuse, despite the alleged violations of Twitter’s rules, which prohibit tweets involving violent threats, harassment, and hateful conduct. As one victim of serial harassment told BuzzFeed News following the survey, “It only adds to the humiliation when you pour your heart out and you get an automated message saying, ‘We don’t consider this offensive enough.’”

The company has also been criticized for being slow to respond to cases unless they go viral or are flagged by celebrities, public figures, or journalists. In August, the company told Kelly Ellis, a software engineer from California, that the 70 tweets calling her a “psychotic man hating ‘feminist’” and wishing that she’d be raped did not violate company rules. The tweets were taken down shortly after a BuzzFeed News report.

And as recently as last week, the company was slow to block attempts by well-known alt-right trolls to suppress minority voter turnout with photoshopped fake Clinton campaign ads that encouraged users to “vote from home.” In response, Twitter CEO Jack Dorsey told BuzzFeed News that he was “not sure how this slipped past us, but now it’s fixed.” Four days later, BuzzFeed News found dozens of similar tweets from pro-Trump trolls across the site.

But the new tools for users to proactively shield themselves — coupled with a staff equipped to deal with the volume and complexity of indefatigable, constantly evolving trolls — may be a heartening sign that the company is at last serious about taking on the problems of abuse and harassment that have plagued its platform.

The new updates come at a critical time for Twitter: For months, the company has fought against stagnant growth, a declining stock price, and an exodus of leadership, including, just last week, COO Adam Bain. The company’s failure to curb abuse has turned the platform into a primary destination for trolls and hate groups, a reputation that reportedly drove away potential buyers, including Salesforce and Disney, this summer. During the 2016 presidential race, Twitter’s role as the social network of choice for the alt-right made the platform increasingly toxic for women and minority groups. Last month, the Anti-Defamation League released a report citing a “significant uptick” in anti-Semitic harassment of journalists. The study counted roughly 2.6 million anti-Semitic tweets, which generated more than 10 billion impressions across the web, between August 2015 and July 2016. The words most frequently found in the bios of the users sending those tweets? “‘Trump,’ ‘nationalist,’ ‘conservative,’ ‘American,’ and ‘white.’”

Now, with a deeply divisive presidency ahead, Twitter’s safety team may face an unprecedented test and heavy scrutiny. Just days after Trump's victory, alt-right trolls across the internet are gearing up for an ideological war, fought with false information and aggressive harassment on Twitter.

The question now is whether Twitter can still reverse the damage done by trolls and save what was arguably the most significant platform for news during this election cycle. In a blog post on the new abuse changes, the company affirmed its commitment to “rapidly improving Twitter based on everything we observe and learn,” a credo that, if it holds true, could begin to earn back trust. But perhaps just as telling is the caveat in the sentence before: “We don't expect these announcements to suddenly remove abusive conduct from Twitter. No single action by us would do that.”